Extract SAP data to Azure Data Lake for scale-out analytics in the cloud

/Background

With the rapid growth of enterprise data especially to the SAP shops, scalable analytics is more important than ever to business decisions and future business planning. More and more customers are looking into scalable big data platforms for the analytics solutions, in order to get results in timely manner.

Since the debut 3 years ago, Azure Data Lake (ADL), as one of the flagship big data offerings from Microsoft, has gained more and more traction. According to Microsoft, "Azure Data Lake Store is an enterprise-wide hyper-scale repository for big data analytic workloads". However, there has not been clear guidance in industry on how to couple SAP data and ADL together productively. Lots of existing solutions are still fragmented, with limited features, multiple data staging hops, and manual steps involved.

Here we will explain an End-to-End solution to extract large volume SAP data into Azure Data Lake Store (ADLS), by leveraging the very powerful and mature SQL Server Integration Services (SSIS) from Microsoft. On the data destination side, we will leverage Azure Feature Pack for SSIS. On the data source side, we will leverage AecorSoft Integration Service. For the huge SSIS user base worldwide, this can be the best approach to maximize their existing investment in SSIS and bring the most value out of SAP data. For the prospective Azure Data Lake customers who are unfamiliar with SSIS, this solution can be a very promising option to add to their future roadmap of scalable analytics.

High Level View

Prerequisite

There are three pillars of the solution:

- The engine: SQL Server Integration Services.

- The Target enabler: Azure Feature Pack for SSIS.

- The Source enabler: AecorSoft Integration Service for SSIS.

Notice that for non-SQL Server users who want to explore, please sign up Visual Studio Dev Essentials at Microsoft website and download SQL Server Developer Edition for free, which comes with SSIS and Visual Studio shell (to develop SSIS projects). Notice the developer edition free license is not for production use.

Both AecorSoft Integration Service for SSIS and Azure Feature Pack for SSIS support SSIS 2012, 2014, 2016, 2017.

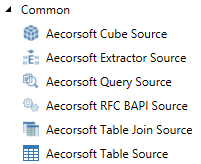

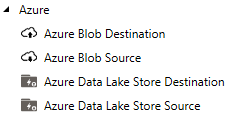

Once installed, the components will show up in Visual Studio's SSIS Toolbox:

When the SSIS project is created in Visual Studio, we need to ensure the SSIS project version matches the SSIS engine version. For example, if the SSIS engine is 2014, then the SSIS project needs to target at SQL Server 2014. In case there is need to switch to another version instead of the default one, we will need to install SQL Server Data Tools (SSDT) which offers backward compatibility. The details can be found in plenty of other blogs online.

Step-by-Step Configuration

The workflow is: Source Connection Manager --> Source Component --> Destination Connection Manager --> Destination Component.

Source Connection Setup

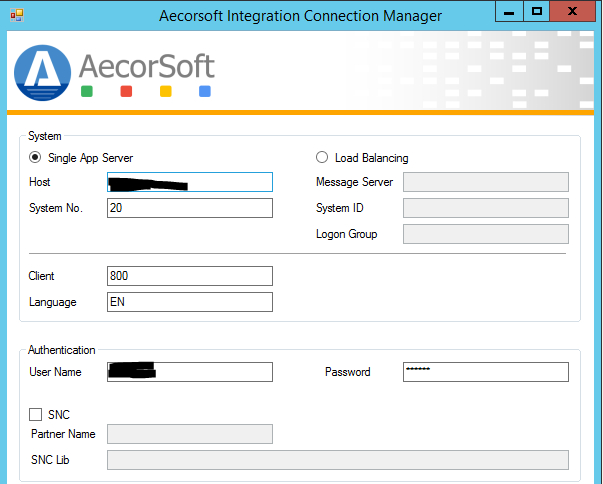

In the Connection Manager area, right click and choose "New Connection", and then choose "AISAP".

Then, in the dialog window, fill in the system information. Load Balancing and Single Sign-On are supported as well.

Source Component

There are six types of supported SAP objects: Table, Table Join, Query, BAPI/RFC, Extractor, and InfoCube/BEx. We will use the Table object as the example, and we choose the General Ledger header table BKPF.

Filters can be defined by right clicking on field names. Column order can be re-arranged through drag-n-drop too.

Destination Connection Manager

Setting up the connection to Azure Data Lake needs a little work. In the New Connection, we choose

Then, in the next dialog window, we need to ensure SSIS is authorized to access ADLS.

First, we need to fill in the required information. Here, ensure the user name is the work ID/email which is available in Azure AD and could be in the style of "<user>@<companyname>.onmicrosoft.com", not the personal ID/email. To double check the work ID, log into Azure portal, and check the user in AAD section. It is very important that only the accurate work ID works here,

Next, we need to click on "Test" button to bring up a popup authentication window, which asks for permission to allow SSIS to access ADLS. Click "Yes", and the "Test connection succceeded!" message will show up. This popup window is only one-time to permit SSIS application.

Destination Component

Now, drag and drop the "Azure Data Lake Store Destination" to the Visual Studio data flow canvas.

The "File Path" here actually should be "Full path and file name". For example, here we have created a folder "SAP" in ADLS web portal, and we want to save the data to file "BKPF". We put "SAP/BKPF" here.

Now, a basic SSIS flow is set up.

Execution

There are two ways to execute SSIS package: the GUI interactive way in Visual Studio, and the command line interface way via dtexec command.

GUI way

The job can be executed directly from Visual Studio.

The advanced algorithms in AecorSoft product brings up superb performance at SAP data extraction, and such performance is critical to the enablement of real-time IT. The example shown here is from SSIS running on a laptop and SAP running in a VM on a quad core i7 CPU PC.

Command Line way

It is important to choose the correct dtexec.exe to execute the dtsx package on command line.

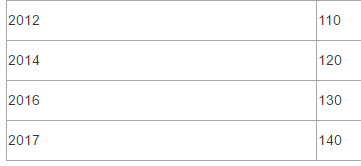

The example shows the dtexec.exe under subfolder "120". The mapping is:

Conclusion

By using SSIS, AecorSoft Integration Service for SSIS, and Azure Feature Pack for SSIS, we are now able to productively integrate SAP with ADLS, and achieve very high performance, which is critical to the large volume SAP data extracts. The data is streamed from SAP to ADLS, all through memory, and there is no intermediate data hop or disk staging. All the SAP data accesses comply with SAP security and only go through SAP application layer.

With this pattern we can also handle other SAP objects like extractors and BW cubes in very high performance too, faster or even multiple times faster than other solutions on market.

Once data is in ADLS, advanced analytics and machine learning algorithms can be applied to truly make the most out of the data.